The Unicode Sandwich

Explaining The Unicode Sandwich: The Current Best Practice to Manipulate String and Text Files in Python!

After dealing with Sequences and Dictionaries, today we are talking about a little boring but albeit very important subject of Unicode, Encoding and Decoding Strings. You might think that understanding Unicode is not important. However, there is no escaping the str versus byte divide.

The Unicode Dilemma

Unicode provides multiple ways of representing some characters, so normalizing is a prerequisite to solve any problem that deals with text. In this article we will visit the following topics:

- Characters, code points, and byte representations.

- Avoiding and dealing with encoding errors.

- Best practices when handling text files.

- The default encoding trap and standard I/O issues.

- Safe Unicode text comparisons with normalization.

- Proper sorting of Unicode text with locale and the pyuca library.

Character Issues

The big elephant in the room is the Definition of a character.

What is a character? There are multiple definitions:

- A single visual object used to represent text.

- A single unit of information.

- A Unicode character, as of 2021 this is the best definition of a “character” we have.

The Unicode standard explicitly separates the Identity of characters from their byte representations:

- The identity of a character is a Code Point, it’s a number from 0 to 1,114,111 (base 10), shown in the Unicode standard as 4 to 6 hex digits with a “U+” prefix. For example: letter A is U+0041.

- The actual bytes or the byte representation is an output of the Encoding algorithm that was used to convert the Code Point to byte sequences. For Example: A (U+0041) is encoded as the single byte \x41

in the UTF-8 encoding.

One last thing to mention, converting from code points to bytes is Encoding and converting from bytes to code points is Decoding.

s = 'café'

b = s.encode('utf8')

print(b)

# 'caf\xc3\xa9'

# The code point for “é” is encoded as two bytes in UTF-8

s_decoded = b.decode('utf8')

print(s_decoded)

# 'café'Byte Essentials

There are 2 Built in types for binary sequences:

- The immutable bytes type.

- The mutable bytearray type.

Each item in bytes or bytearray is an integer from 0 to 255.

cafe = bytes('café', encoding='utf_8')

print(cafe)

# b'caf\xc3\xa9'

print(cafe[0])

# 99

cafe_arr = bytearray(cafe)

print(cafe_arr)

# bytearray(b'caf\xc3\xa9')Although binary sequences are really sequences of Integers, their literal notation reflects the fact that ASCII text is often embedded in them:

- Bytes with decimal codes 32 to 126: the ASCII character itself is used.

- Bytes corresponding to tab, newline, carriage return, and “\” :the escape

sequences \t, \n, \r, and \\ are used. - If both string delimiters ‘ and “ appear in the byte sequence, the whole sequence is delimited by ‘, and any ‘ inside are escaped as \’ .

- For other byte values, an hexadecimal escape sequence is used (\x00 is the null byte)

This is why we see (b’caf\xc3\xa9'): the first three bytes b’caf’ are

in the printable ASCII range, the last two are not.

We got a little bit technical here, but there is one last thing to mention, Both bytes and bytearray support every str method except those that do formatting (format, format_map) and those that depend on Unicode data.

Here’s an example of the same String encoded in different encodings:

for codec in ['latin_1', 'utf_8', 'utf_16']:

print(codec, 'El Niño'.encode(codec), sep='\t')

# Same sentence El Niño encoded in latin_1, utf_8 and utf_16

# latin_1 b'El Ni\xf1o'

# utf_8 b'El Ni\xc3\xb1o'

# utf_16 b'\xff\xfeE\x00l\x00 \x00N\x00i\x00\xf1\x00o\x00'You can find the full list for codecs in Python Codec registry.

Understanding Encode and Decode Problems

Sometime when we try to encode a string or decode a binary sequence using the wrong encoding, we encounter the UnicodeError Exception, such as:

- UnicodeEncodeError: when converting str to binary.

sequences. - UnicodeDecodeError: when reading binary sequences into str.

There is no specific way to deal with this other than to find out the correct encoding of the byte sequence, this chardet Python Package can help, it has more than 30 supported encodings, but overall it’s still guess work.

To cope with the errors and the exception, you could either replace or ignore them, and you deal with them depending on your app context.

city = 'São Paulo'

print(city.encode('utf_8'))

# b'S\xc3\xa3o Paulo'

print(city.encode('cp437'))

# UnicodeEncodeError: 'charmap' codec can't encode character '\xe3'

print(city.encode('cp437', errors='ignore'))

# b'So Paulo'

print(city.encode('cp437', errors='replace'))

# b'S?o Paulo'

octets = b'Montr\xe9al'

print(octets.decode('cp1252'))

# 'Montréal'

print(octets.decode('koi8_r'))

# 'MontrИal'

print(octets.decode('utf_8'))

# UnicodeDecodeError: 'utf-8' codec can't decode byte 0xe9 in position 5

print(octets.decode('utf_8', errors='replace'))

# 'Montr�al'Loading Modules with Unexpected Encoding

Anyone who has tried to load a file in Python probably encountered this at least once in his programming or engineering career: SyntaxError: Non-UTF-8 code.

If you load a .py module containing non UTF-8 data and no encoding declaration you will get the error above, a likely scenario is opening a .py file created on Windows with cp1252 codec.

To solve this we just add a magic coding comment at the top of the file:

# coding: cp1252

print('Olá, Mundo!')

# Olá, Mundo!Handling Text Files

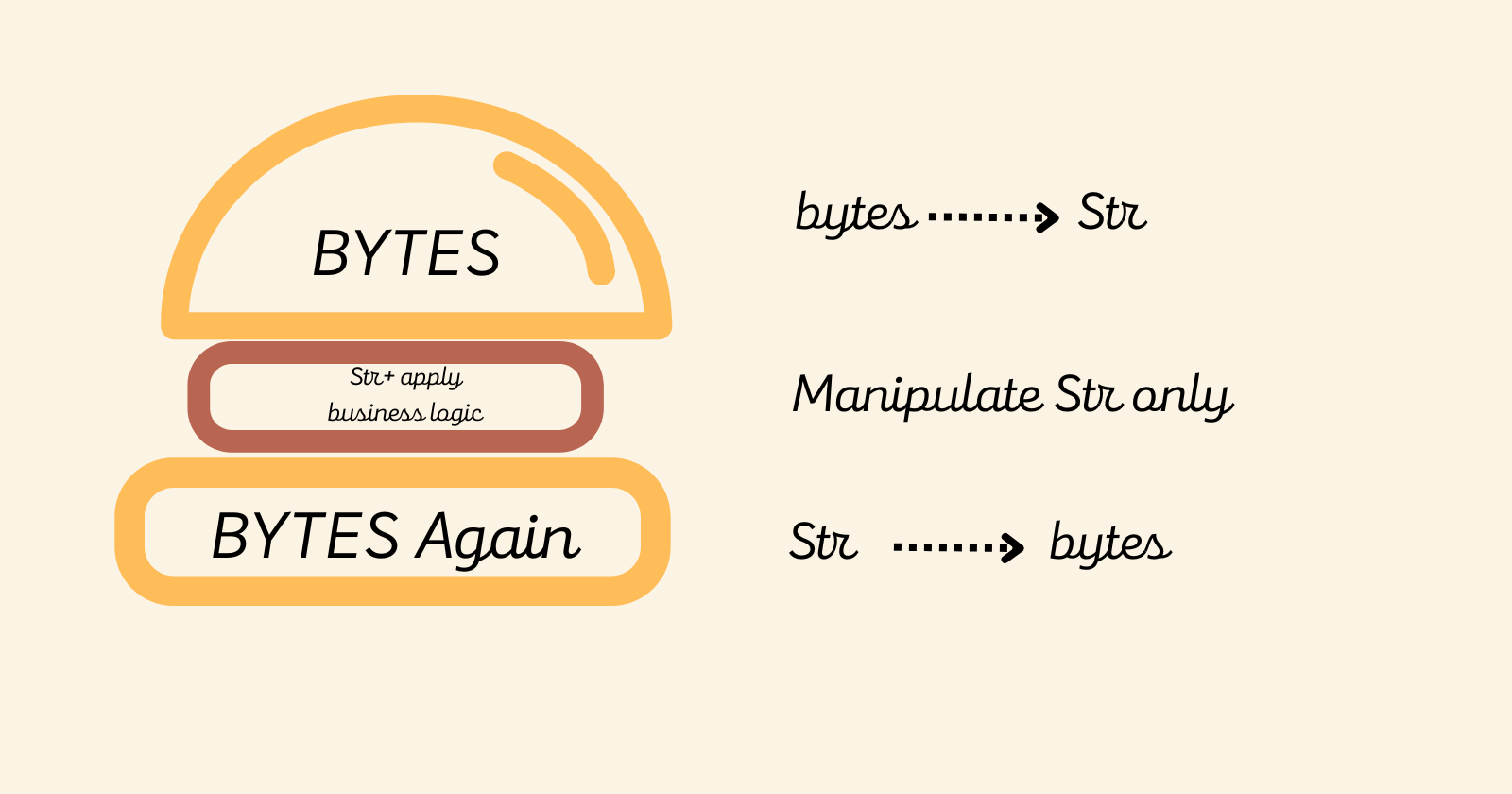

The best practice for handling text I/O is the “Unicode sandwich”:

- Decode to str as soon as possible.

- Apply your business logic.

- Encode back to bytes as late as possible.

To make sure that we do not encounter any exceptions, we should always specify the encoding.

fp = open('cafe.txt', 'w', encoding='utf_8')

print(fp)

# <_io.TextIOWrapper name='cafe.txt' mode='w' encoding='utf_8'>

fp2 = open('cafe.txt')

print(fp2)

# <_io.TextIOWrapper name='cafe.txt' mode='r' encoding='cp1252'>

print(fp2.encoding)

# cp1252Normalizing Unicode as a Solution

String manipulation is complicated by the fact that Unicode has combining characters such as diacritics and other marks that attach to the preceding character.

For example, the word “café” may be composed in two ways:

s1 = 'café'

s2 = 'cafe\N{COMBINING ACUTE ACCENT}'

print(s1, s2)

# ('café', 'café')

print(len(s1), len(s2))

# (4, 5)

print(s1 == s2)

# False The solution is to use the unicodedata.normalize() function.

The first argument to this function is one of four Unicode Normalization Forms

- Normalization Form C (NFC) composes the code points to produce the shortest equivalent string.

- Normalization Form D (NFD) decomposes the code points, expanding composed characters into base characters and separate combining characters.

- Normalization Form KC (NFKC) is the same as NFC only stronger affecting compatibility characters.

- Normalization Form KD (NFKD) is the same as NFD only stronger affecting compatibility characters.

Unicode Normalization Forms are formally defined normalizations of Unicode Strings which make it possible to determine whether any two Unicode strings are equivalent to each other.

s1 = 'café'

s2 = 'cafe\N{COMBINING ACUTE ACCENT}'

print(len(normalize('NFC', s1)), len(normalize('NFC', s2)))

# (4, 4)

print(len(normalize('NFD', s1)), len(normalize('NFD', s2)))

# (5, 5)

print(normalize('NFC', s1) == normalize('NFC', s2))

# True

print(normalize('NFD', s1) == normalize('NFD', s2))

# TrueSorting Unicode Text Problem

Python sorts strings by comparing the code points. Unfortunately, this produces unacceptable results for anyone who uses non-ASCII characters, more specifically, different locales have different sorting rules.

The standard way to sort non-ASCII text in Python is to use the locale.strxfrm function which, according to the locale module docs, transforms a string to one that can be used in locale-aware comparisons.

import locale

# set locale to Portuguese with UTF-8 encoding

my_locale = locale.setlocale(locale.LC_COLLATE, 'pt_BR.UTF-8')

fruits = ['caju', 'atemoia', 'cajá', 'açaí', 'acerola']

sorted_fruits = sorted(fruits, key=locale.strxfrm)

print(sorted_fruits)

# ['açaí', 'acerola', 'atemoia', 'cajá', 'caju']There are few caveats though:

- The locale must be installed on the OS, otherwise setlocale raises a

locale.Error: unsupported locale setting exception. - The locale must be correctly implemented by the makers of the OS, which is not always the case.

- Locale settings are global, calling setlocale is global. Your application or framework should set the locale when the process starts, and should not change it afterward.

The solution to sorting Unicode Text

The simplest solution for us is to use a Library, as always.

Pyuca is a pure Python implementation of the Unicode Collation Algorithm (UCA).

The UCA details how to compare two Unicode strings while remaining conformant to the requirements of the Unicode Standard.

import pyuca

coll = pyuca.Collator()

fruits = ['caju', 'atemoia', 'cajá', 'açaí', 'acerola']

sorted_fruits = sorted(fruits, key=coll.sort_key)

print(sorted_fruits)

# ['açaí', 'acerola', 'atemoia', 'cajá', 'caju']Further reading

This is a brief overview of Unicode Text and Bytes in Python. It’s a heavy subject that we cannot dive into all of it in just one article. So here’s a list of links that can help your research:

- The official “Unicode HOWTO” in the Python docs

- Chapter 2, “Strings and Text” of the book: The Python Cookbook, 3rd ed

- Nick Coghlan’s “Python Notes” blog has two posts very relevant to this chapter: “Python 3 and ASCII Compatible Binary Protocols” and “Processing Text Files in Python 3”. Highly recommended.

- List of encodings supported in Python.

- The book Unicode Explained by Jukka K. Korpel.

- The book Programming with Unicode.

- Chapter 4 of the book Fluent Python.